Greetings,

Welcome to the latest edition of the State of AI. In this issue, we explore recent advances that continue to reshape how we interact with technology. Discover the Phi-3 Technical Report, which describes a language model that runs locally on your phone; see Groma’s localized visual tokenization for grounding multimodal large language models; and meet PhysDreamer, which enables physics-based interaction with 3D objects through video generation. We also introduce Reka Core, Flash, and Edge, a series of multimodal language models, and look at TextSquare’s approach to scaling up text-centric visual instruction tuning.

Each highlighted breakthrough promises to deepen your understanding of AI’s growing influence across various domains. Get ready for another engaging and enlightening read.

Best regards,

Contents

Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone

Groma: Localized Visual Tokenization for Grounding Multimodal Large Language Models

PhysDreamer: Physics-Based Interaction with 3D Objects via Video Generation

Reka Core, Flash, and Edge: A Series of Powerful Multimodal Language Models

TextSquare: Scaling up Text-Centric Visual Instruction Tuning

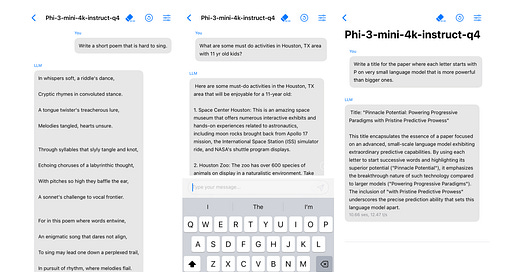

Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone

Authors: Group from Microsoft Research

Source and references: https://arxiv.org/abs/2404.14219

Introduction

The push for more capable and efficient artificial intelligence has produced language models, systems that understand and generate human-like text. Microsoft has now unveiled phi-3-mini, a 3.8 billion parameter language model trained on 3.3 trillion tokens that is small enough to run on a smartphone while rivaling much larger models. That is a meaningful step for AI accessibility and utility.

The Advent of Miniature Giants

Phi-3-mini shows how far carefully curated training data can go: it allows a substantial reduction in model size while maintaining strong performance. Traditionally, capabilities on the level of GPT-3.5 were confined to cloud computing environments because of the models' size. Microsoft's results suggest that, with the right data and training techniques, a model can be made small enough to run on local devices without a hefty trade-off in capability.

The Core Innovation: Data Optimization

The cornerstone of phi-3-mini’s success is its training data. The dataset is a scaled-up version of the one used for its predecessor, phi-2, and combines heavily filtered web data with synthetic data generated by other language models. According to the report, this emphasis on data quality, rather than on model size alone, is what lets such a small model reach high performance.

Performance and Practicality

On standard benchmarks, phi-3-mini compares well with much larger models such as GPT-3.5 and Mixtral 8x7B, scoring 69% on MMLU and 8.38 on MT-bench according to the report. What makes this notable is that the model is small enough to run on a common smartphone. Imagine having advanced AI in your pocket, ready to assist with tasks from simple questions to complex problem-solving without needing an internet connection.
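To make the "fits on a phone" claim concrete, here is a rough back-of-the-envelope sketch of weight storage at different precisions. It assumes the 3.8 billion parameter count given in the report and counts only the weights; activations, the KV cache, and runtime overhead are ignored, and the helper name `model_memory_gb` is purely illustrative.

```python
def model_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (10^9 bytes).

    Assumption: every parameter is stored at the same bit width, and
    nothing besides the weights is counted.
    """
    return num_params * bits_per_weight / 8 / 1e9


# Parameter count taken from the Phi-3 report; treat as approximate.
PHI3_MINI_PARAMS = 3.8e9

for bits in (16, 8, 4):
    size = model_memory_gb(PHI3_MINI_PARAMS, bits)
    print(f"{bits}-bit weights: ~{size:.1f} GB")
```

At 4 bits per weight the estimate comes to about 1.9 GB, in line with the roughly 1.8 GB the report cites for the 4-bit quantized model, which is why quantization matters so much for on-device use.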

Beyond phi-3-mini

The report also covers two larger models, phi-3-small (7 billion parameters) and phi-3-medium (14 billion parameters), indicating a scalable path that preserves these efficiencies at higher tiers of computational ability. These models extend the capabilities of phi-3-mini, targeting devices with more processing power while still being significantly more efficient than their predecessors.

Harnessing AI Ethically and Safely

Microsoft has focused not only on making AI more compact and capable but also on making it safer and better aligned with ethical guidelines. The safety measures applied to phi-3-mini during post-training, including red-teaming and automated evaluations, reflect Microsoft's commitment to responsible AI. This is crucial as AI becomes more pervasive in everyday applications.

Conclusion: A Glimpse into the Future

The development of phi-3-mini is a watershed moment in the evolution of AI technology. By making powerful models accessible on handheld devices, Microsoft is democratizing AI, opening up countless opportunities for innovation in mobile applications. This development also signals a shift towards more sustainable AI, where power and size efficiencies become crucial metrics of technological advancement. As we move forward, the blend of capability, accessibility, and safety in AI will likely be the benchmarks against which future advancements are measured, reshaping our interaction with technology in everyday life.

Groma: Localized Visual Tokenization for Grounding Multimodal Large Language Models

Authors: Chuofan Ma, Yi Jiang, Jiannan Wu, Zehuan Yuan, Xiaojuan Qi

Source and references: https://arxiv.org/abs/2404.13013

Introduction

The interplay between vision and language has driven AI research toward systems that understand the world more as humans do. Enter "Groma", an approach to Multimodal Large Language Models (MLLMs) that is notable for its ability not just to see an image, but also to precisely understand and describe localized visual details within it.
