LLaMA Pro: Progressive LLaMA with Block Expansion - AI Pulse

Welcome to the 4th issue of AI Pulse. Our goal is simple: each issue will focus on breaking down one important AI or Machine Learning paper. We aim to provide clear, in-depth analysis so that our readers, whether they're professionals, academics, or enthusiasts, can easily understand key developments in the field.

Reading Time: ~ 5 Minutes

LLaMA Pro: Progressive LLaMA with Block Expansion

Authors: Chengyue Wu, Yukang Gan, Yixiao Ge, Zeyu Lu, Jiahao Wang, Ye Feng, Ping Luo, Ying Shan

Source and references: https://arxiv.org/abs/2401.02415

Introduction

In natural language processing, large language models (LLMs) such as GPT-3 and LLaMA have driven groundbreaking advances. Strong as these models are on general tasks, however, they tend to underperform in specialized domains like programming, mathematics, biomedicine, or finance. To address this, the authors of LLaMA Pro: Progressive LLaMA with Block Expansion propose block expansion, a technique that lets a model specialize in a new domain without losing its general abilities.

Block Expansion Method

To understand block expansion, it helps to first look at how LLMs are usually adapted. They are pre-trained on a massive corpus of general-domain text and then further trained on domain-specific data, which is how researchers inject new knowledge and specialized abilities for their needs. Updating all of the model's weights this way, however, often causes "catastrophic forgetting": the model loses some of its original capabilities as it adapts to the new domain.

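This is where block expansion comes in. The authors expand an off-the-shelf pre-trained LLM by inserting copies of its own Transformer blocks. Each copied block has its output projections (the attention output matrix and the final MLP projection) initialized to zero, so, thanks to the residual connection, the new block initially passes its input through unchanged and the expanded model starts out producing the same outputs as the original. Only the added blocks are then trained on domain data while the original blocks stay frozen, which lets the model learn new domain-specific knowledge while preserving its initial capabilities.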
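The mechanism can be illustrated with a toy model. What follows is a minimal, pure-Python sketch under simplifying assumptions, not the authors' code. Each block is a hypothetical `ToyBlock` computing `x + W_out · relu(W_in · x)`, which stands in for a real Transformer block and omits attention, normalization, and batching. The helper `expand` (also hypothetical) marks the original blocks as frozen and inserts, after each group, a copy of that group's last block with its output matrix zeroed. The usage check confirms that, before any training, the expanded stack reproduces the original outputs exactly.

```python
import copy
import random


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


class ToyBlock:
    """Hypothetical stand-in for a Transformer block: y = x + W_out @ relu(W_in @ x)."""

    def __init__(self, dim, hidden, rng):
        self.w_in = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(hidden)]
        self.w_out = [[rng.uniform(-1, 1) for _ in range(hidden)] for _ in range(dim)]
        self.trainable = True

    def __call__(self, x):
        hidden = [max(0.0, h) for h in matvec(self.w_in, x)]
        delta = matvec(self.w_out, hidden)
        # Residual connection: if W_out is all zeros, delta is zero and the output equals x.
        return [a + d for a, d in zip(x, delta)]


def identity_copy(block):
    """Copy a block and zero its output projection so it starts as an identity map."""
    new_block = copy.deepcopy(block)
    new_block.w_out = [[0.0] * len(row) for row in new_block.w_out]
    new_block.trainable = True  # only the added blocks would be updated in training
    return new_block


def expand(blocks, num_groups):
    """Freeze the original blocks and insert one identity copy after each group."""
    if len(blocks) % num_groups != 0:
        raise ValueError("number of blocks must be divisible by num_groups")
    per_group = len(blocks) // num_groups
    expanded = []
    for i, block in enumerate(blocks):
        block.trainable = False  # original weights stay frozen
        expanded.append(block)
        if (i + 1) % per_group == 0:
            expanded.append(identity_copy(block))
    return expanded


def forward(blocks, x):
    """Run the input through the blocks in order."""
    for block in blocks:
        x = block(x)
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    base = [ToyBlock(dim=4, hidden=8, rng=rng) for _ in range(8)]
    x = [0.5, -1.0, 2.0, 0.1]
    before = forward(base, x)
    expanded = expand(base, num_groups=2)
    print(len(expanded))                                        # 10
    print(forward(expanded, x) == before)                       # True
    print([i for i, b in enumerate(expanded) if b.trainable])   # [4, 9]
```

In a real LLaMA-style block there are two residual sublayers (attention and MLP). Zeroing both of their output projections plays the same role as zeroing `w_out` here. In a framework such as PyTorch, the `trainable` flag would correspond to turning off gradients for the frozen parameters.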

The LLaMA Pro Model

Building on block expansion, the authors introduce LLaMA Pro. They start from LLaMA2-7B, a strong pre-trained LLM, and expand it from 32 to 40 Transformer blocks. The resulting model, LLaMA Pro (8.3 billion parameters), is then further trained on open-source code and math data, a stage the paper calls post-pretraining. The outcome is a single model that performs well on general, mathematical, and programming tasks instead of trading one for another.
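The 32-to-40 expansion and the 8.3B figure can be checked with a little arithmetic. The sketch below rests on two assumptions. The first is the public LLaMA2-7B configuration: hidden size 4096, MLP size 11008, vocabulary of 32,000, no bias terms, and untied input and output embeddings. The second, based on our reading of the paper, is that one new block is added after every group of four original blocks, giving 8 groups. The helpers `block_params`, `model_params`, and `expanded_layout` are illustrative only and do not come from any library.

```python
# Assumed LLaMA2-7B configuration values (public model config).
HIDDEN = 4096
INTERMEDIATE = 11008
VOCAB = 32000
NUM_LAYERS = 32


def block_params(hidden=HIDDEN, intermediate=INTERMEDIATE):
    """Parameters in one LLaMA-style decoder block (no bias terms)."""
    attention = 4 * hidden * hidden       # q, k, v and output projections
    mlp = 3 * hidden * intermediate       # gate, up and down projections
    norms = 2 * hidden                    # two RMSNorm weight vectors
    return attention + mlp + norms


def model_params(num_layers):
    """Total parameters: blocks + input embeddings + untied LM head + final norm."""
    embeddings = VOCAB * HIDDEN
    lm_head = VOCAB * HIDDEN
    final_norm = HIDDEN
    return num_layers * block_params() + embeddings + lm_head + final_norm


def expanded_layout(num_layers, num_groups):
    """Block order after expansion: ("orig", i), or ("new", i) for a copy of block i."""
    per_group = num_layers // num_groups
    layout = []
    for i in range(num_layers):
        layout.append(("orig", i))
        if (i + 1) % per_group == 0:
            layout.append(("new", i))
    return layout


if __name__ == "__main__":
    layout = expanded_layout(NUM_LAYERS, num_groups=8)
    new_positions = [pos for pos, (kind, _) in enumerate(layout) if kind == "new"]
    print(len(layout))                 # 40
    print(new_positions)               # [4, 9, 14, 19, 24, 29, 34, 39]
    print(model_params(NUM_LAYERS))    # 6738415616 (about 6.74B, LLaMA2-7B)
    print(model_params(len(layout)))   # 8357482496 (about 8.36B, expanded model)
    print(8 * block_params())          # 1619066880 (parameters in the 8 new blocks)
```

Under these assumptions, the expanded model has about 8.36 billion parameters, consistent with the reported 8.3B. If only the eight new blocks are trained, roughly 1.6 billion parameters (about a fifth of the total) are updated during post-pretraining.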

Evaluation and Benchmarks

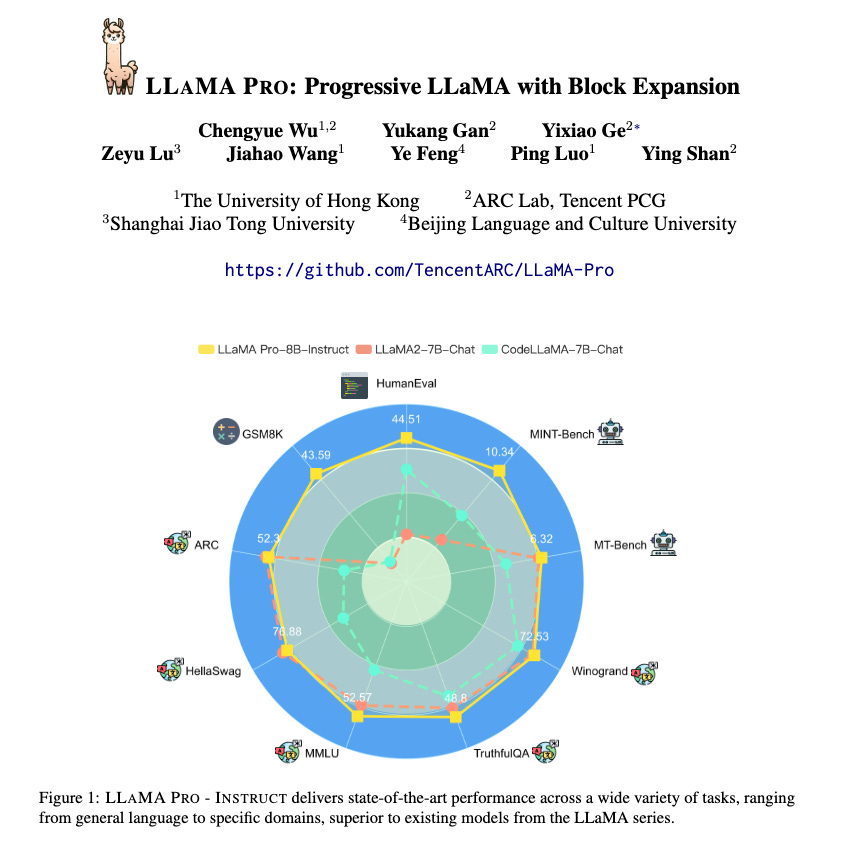

To demonstrate the effectiveness of LLaMA Pro and its instruction-following abilities (evaluated through an instruction-tuned variant, LLaMA Pro-Instruct), the researchers ran experiments on benchmarks covering general language, code, and math tasks. Compared with other prominent models in the LLaMA family, it delivered the best results overall across these three categories.

On general language benchmarks such as HellaSwag and MMLU, LLaMA Pro scored higher than domain-specialized models like CodeLLaMA-7B, WizardCoder-Python-7B, and WizardMath-7B. On code benchmarks like HumanEval and MBPP, it clearly outperformed general-purpose chat models such as LLaMA2-7B-Chat.

The researchers also evaluated LLaMA Pro in more interactive settings. MINT-Bench measures multi-turn tool use and the ability to ground actions in environmental and human feedback, while MT-Bench scores multi-turn conversation. The results suggest that LLaMA Pro's balanced skills carry over to agent-style tasks that require adapting to different environments.

Implications and Extensions

LLaMA Pro's results point to the potential of combining natural and programming languages in AI-driven applications. This opens up possibilities for advanced language agents that can operate effectively across different environments.

Because block expansion is an efficient and effective post-pretraining method, it gives researchers a practical way to customize LLMs for specialized requirements without sacrificing general ability. As natural language processing evolves, techniques like block expansion and models like LLaMA Pro could pave the way for more adaptable AI assistants that serve a wide range of applications.