Contents

GeoCode-GPT: A Large Language Model for Geospatial Code Generation Tasks

Altogether: Image Captioning via Re-aligning Alt-text

Representation Shattering in Transformers: A Synthetic Study with Knowledge Editing

SELA: Tree-Search Enhanced LLM Agents for Automated Machine Learning

CLEAR: Towards Contextual LLM-Empowered Privacy Policy Analysis and Risk Generation for Large Language Model Applications

VILA-U: a Unified Foundation Model Integrating Visual Understanding and Generation

Prioritized Generative Replay

LongRAG: A Dual-Perspective Retrieval-Augmented Generation Paradigm for Long-Context Question Answering

Scaling Robot Policy Learning via Zero-Shot Labeling with Foundation Models

Scaling up Masked Diffusion Models on Text

Taming Data and Transformers for Audio Generation

Tuning-free coreset Markov chain Monte Carlo

PortLLM: Personalizing Evolving Large Language Models with Training-Free and Portable Model Patches

SkillMimicGen: Automated Demonstration Generation for Efficient Skill Learning and Deployment

GeoCode-GPT: A Large Language Model for Geospatial Code Generation Tasks

Authors: Shuyang Hou, Zhangxiao Shen, Anqi Zhao, Jianyuan Liang, Zhipeng Gui, Xuefeng Guan, Rui Li, Huayi Wu

Source and references: https://arxiv.org/abs/2410.17031v1

Introduction

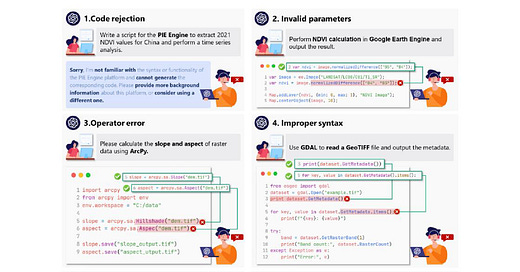

This paper presents GeoCode-GPT, which the authors describe as the first large language model (LLM) dedicated to geospatial code generation. It targets the difficulties general-purpose LLMs face with geospatial code: specialized data formats, massive datasets, and platform-specific syntax and logic.

Key Points

Introduction of the GeoCode-PT and GeoCode-SFT corpora and the GeoCode-Eval evaluation dataset, which together provide a systematic corpus foundation for pretraining and fine-tuning LLMs on geospatial code generation, along with tools for evaluating them.

Proposal of a novel pretraining and fine-tuning strategy that combines QLoRA and LoRA to balance computational resources and training efficiency.

Establishment of a comprehensive evaluation framework for geospatial code that combines option matching, expert validation, and prompt-engineering-based scoring of LLMs, offering a template for the holistic evaluation of domain-specific models.

Development and release of GeoCode-GPT-7B, the first LLM dedicated to geospatial code generation tasks.
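The option-matching part of the evaluation framework can be pictured as pulling the chosen letter out of a model's free-text answer and comparing it with the gold label. The sketch below is a hypothetical illustration, not the authors' scoring code: the A-D option set, the two regular expressions, and the function names `extract_option` and `option_accuracy` are all assumptions.

```python
import re
from typing import List, Optional

# Hypothetical answer formats this sketch recognizes: "Answer: B",
# "The answer is (C).", or a reply that opens with the letter, e.g. "D. ...".
ANSWER_PATTERN = re.compile(r"[Aa]nswer\s*(?:is)?\s*:?\s*\(?([A-D])\)?(?![A-Za-z])")
LEADING_PATTERN = re.compile(r"^\s*\(?([A-D])(?:[).:\s]|$)")


def extract_option(response: str) -> Optional[str]:
    """Return the option letter (A-D) a model chose, or None if none is found."""
    match = ANSWER_PATTERN.search(response) or LEADING_PATTERN.match(response)
    return match.group(1) if match else None


def option_accuracy(responses: List[str], gold: List[str]) -> float:
    """Fraction of responses whose extracted option equals the gold option."""
    if not responses or len(responses) != len(gold):
        raise ValueError("need one gold label per response")
    correct = sum(extract_option(r) == g for r, g in zip(responses, gold))
    return correct / len(responses)


responses = ["Answer: B", "The answer is (C).", "D. Build a spatial index", "I am not sure"]
gold = ["B", "A", "D", "C"]
print(option_accuracy(responses, gold))  # → 0.5 (B and D match; C and None do not)
```

Unparseable replies count as wrong rather than being skipped, which keeps the metric conservative; a real harness would likely constrain the output format in the prompt instead of relying on regex heuristics.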

Methodology

The researchers first ran lightweight, unsupervised autoregressive (next-token prediction) pretraining of CodeLlama-7B with QLoRA, then performed supervised fine-tuning on instruction-tuning data with LoRA. The result is GeoCode-GPT-7B.
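Both stages rely on the same low-rank adapter idea. LoRA freezes a weight matrix W and learns a low-rank update, so a layer computes y = W x + (alpha / r) · B A x, where A is r × d_in, B is d_out × r, and B starts at zero so training begins from the unchanged base model. QLoRA keeps the same adapter but stores the frozen W in 4-bit precision (the QLoRA paper uses a NormalFloat4 data type). The pure-Python sketch below is a toy illustration under stated assumptions: it substitutes a plain symmetric absmax 4-bit quantizer for NF4, uses tiny made-up matrices, and its helper names are hypothetical; it is not the authors' training code.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def quantize_int4(m: Matrix) -> Tuple[List[List[int]], List[float]]:
    """Symmetric absmax quantization of each row to integers in [-7, 7].

    Simplified stand-in for QLoRA's NF4 storage (assumption): returns the
    integer codes plus one float scale per row.
    """
    codes, scales = [], []
    for row in m:
        scale = max(abs(w) for w in row) / 7 or 1.0  # all-zero row: avoid dividing by 0
        codes.append([round(w / scale) for w in row])
        scales.append(scale)
    return codes, scales


def dequantize(codes: List[List[int]], scales: List[float]) -> Matrix:
    """Recover approximate float weights from codes and per-row scales."""
    return [[c * s for c in row] for row, s in zip(codes, scales)]


class LoRALinear:
    """Frozen (optionally 4-bit quantized) base weight plus a trainable low-rank update."""

    def __init__(self, base: Matrix, a: Matrix, b: Matrix, alpha: float, quantize: bool = False):
        self.rank = len(a)                 # A has shape (r, d_in)
        self.scaling = alpha / self.rank   # LoRA scaling factor alpha / r
        if quantize:
            # QLoRA-style: keep only low-bit codes of the frozen weight
            self.codes, self.scales = quantize_int4(base)
            self.base = None
        else:
            self.base = base
        self.a, self.b = a, b              # only A and B would be trained

    def frozen_weight(self) -> Matrix:
        return self.base if self.base is not None else dequantize(self.codes, self.scales)

    def forward(self, x: Vector) -> Vector:
        base_out = matvec(self.frozen_weight(), x)
        update = matvec(self.b, matvec(self.a, x))  # B (A x), never forming B A explicitly
        return [y + self.scaling * u for y, u in zip(base_out, update)]


W = [[1.0, 2.0], [3.0, 4.0]]
A = [[1.0, 1.0]]    # rank r = 1, d_in = 2
B = [[0.0], [0.0]]  # B initialized to zero
layer = LoRALinear(W, A, B, alpha=2.0)
print(layer.forward([1.0, 1.0]))  # → [3.0, 7.0]: the adapter starts as a no-op
```

Computing B (A x) instead of materializing B A is what keeps the adapter cheap: with rank r much smaller than the layer width, the extra work and the trainable parameter count both scale with r.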

Results and Findings

Experimental results show that GeoCode-GPT outperforms the other models evaluated by 9.1% to 32.1% in multiple-choice accuracy, 1.7% to 25.4% in code summarization, and 1.2% to 25.1% in code generation. Despite having significantly fewer parameters than commercial models, GeoCode-GPT approaches their performance on certain metrics.

Implications and Conclusions

GeoCode-GPT advances the use of LLMs for geospatial code generation, offering both a concrete method for improving LLM performance in this domain and empirical evidence that it works. The research framework and findings offer guidance for further unlocking the potential of LLMs in geospatial code generation tasks.

Altogether: Image Captioning via Re-aligning Alt-text

Authors: Hu Xu, Po-Yao Huang, Xiaoqing Ellen Tan, Ching-Feng Yeh, Jacob Kahn, Christine Jou, Gargi Ghosh, Omer Levy, Luke Zettlemoyer, Wen-tau Yih, Shang-Wen Li, Saining Xie, Christoph Feichtenhofer

Source and references: https://arxiv.org/abs/2410.17251v1

Introduction

This paper focuses on creating synthetic data to improve the quality of image captions. The key idea is to edit and re-align existing alt-text associated with the images, rather than generating captions from scratch, which often lack the nuanced information present in the alt-text.

Key Points

The paper presents a principled approach called Altogether to enhance caption quality by iteratively refining captions to better describe the visual content.

The approach relies on human annotation in which annotators start from the existing alt-text and re-align it to the image content over multiple rounds, building captions rich in visual concepts.

The paper introduces a parameter-efficient captioner that can generalize the process of re-aligning alt-texts at scale.

The synthetic captions generated by Altogether improve performance on various tasks, including text-to-image generation and zero-shot image classification.

Methodology

The authors leverage the insight that the creator who posts an image along with its associated alt-text is likely the most knowledgeable expert regarding the concrete visual concepts within that image. They use this idea to develop a two-pronged approach: (i) human annotation to create a fine-tuning dataset by iteratively refining alt-texts, and (ii) a parameter-efficient captioner that can re-caption billions of images by generalizing this process.

Results and Findings

The authors' evaluation shows that their re-aligned captions outperform alt-texts by 4% in CLIP score and outperform state-of-the-art captioners on a challenging test set. In text-to-image generation, using the synthetic captions improves the similarity between generated images and text prompts. For discriminative tasks, the synthetic captions lead to a 1.1% absolute accuracy improvement in zero-shot classification and a 3% gain on retrieval tasks.
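The CLIP score and zero-shot classification results both reduce to cosine similarity between CLIP image and text embeddings. The sketch below assumes the embeddings have already been computed by a CLIP model (the 3-dimensional vectors are made up for illustration) and uses the CLIPScore formulation w · max(cos, 0) with w = 2.5 from Hessel et al. (2021); the summary does not say which exact variant the Altogether authors report, so treat that choice as an assumption.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def clip_score(image_emb: Vector, caption_emb: Vector, w: float = 2.5) -> float:
    """CLIPScore-style caption score: w * max(cos(image, caption), 0)."""
    return w * max(cosine(image_emb, caption_emb), 0.0)


def zero_shot_classify(image_emb: Vector, class_text_embs: Dict[str, Vector]) -> str:
    """Pick the label whose text embedding (e.g. of "a photo of a {label}")
    is most similar to the image embedding."""
    return max(class_text_embs, key=lambda label: cosine(image_emb, class_text_embs[label]))


# Toy embeddings standing in for real CLIP outputs (hypothetical values).
image = [0.6, 0.8, 0.0]
alt_text = [1.0, 0.0, 0.0]
realigned_caption = [0.6, 0.8, 0.0]
classes = {"dog": [1.0, 0.0, 0.0], "cat": [0.0, 1.0, 0.0], "car": [0.0, 0.0, 1.0]}

print(clip_score(image, alt_text), clip_score(image, realigned_caption))
print(zero_shot_classify(image, classes))  # → cat
```

In this toy setup the caption that is better aligned with the image embedding receives the higher score, which is the property the CLIP-score comparison between re-aligned captions and raw alt-texts relies on.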

Implications and Conclusions

The Altogether approach presents a principled and transparent way to enhance image caption quality, addressing the shortcomings of existing captioning models that often ignore the valuable information present in alt-texts. The authors' findings demonstrate the potential of their approach to improve various computer vision and multimodal tasks.

Representation Shattering in Transformers: A Synthetic Study with Knowledge Editing

Authors: Kento Nishi, Maya Okawa, Rahul Ramesh, Mikail Khona, Ekdeep Singh Lubana, Hidenori Tanaka

Source and references: https://arxiv.org/abs/2410.17194v1
