Contents

UrbanWorld: An Urban World Model for 3D City Generation

Open-Source Conversational AI with SpeechBrain 1.0

DINO Pre-training for Vision-based End-to-end Autonomous Driving

Spider2-V: How Far Are Multimodal Agents From Automating Data Science and Engineering Workflows?

SparQ Attention: Bandwidth-Efficient LLM Inference

DiagrammerGPT: Generating Open-Domain, Open-Platform Diagrams via LLM Planning

FabGPT: An Efficient Large Multimodal Model for Complex Wafer Defect Knowledge Queries

InterFusion: Text-Driven Generation of 3D Human-Object Interaction

Encapsulating Knowledge in One Prompt

sPhinX: Sample Efficient Multilingual Instruction Fine-Tuning Through N-shot Guided Prompting

UrbanWorld: An Urban World Model for 3D City Generation

Authors: Yu Shang, Jiansheng Chen, Hangyu Fan, Jingtao Ding, Jie Feng, Yong Li

Source and references: https://arxiv.org/abs/2407.11965v1

Introduction

This paper presents UrbanWorld, the first generative urban world model that can automatically create customized, realistic and interactive 3D urban environments with flexible control conditions.

Key Points

UrbanWorld is the first urban world model that can automatically create realistic, customized and interactive 3D urban environments.

UrbanWorld demonstrates superior generative ability to craft high-fidelity 3D urban environments, greatly enhancing the authenticity of interactions in the environment.

UrbanWorld is contributed as an open-source tool to benefit broad research communities, including AI agents and embodied intelligence, laying the groundwork for advancing AGI.

Methodology

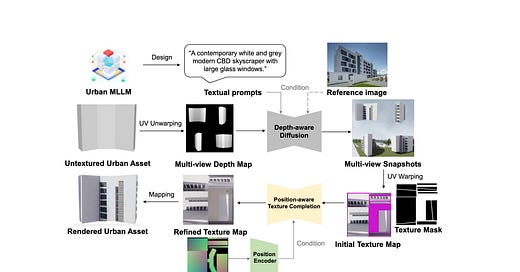

UrbanWorld introduces four key components:

OSM-guided urban layout generation: An automatic 2D-to-3D transformation module built on openly accessible OpenStreetMap (OSM) data.

MLLM-empowered urban scene design: Uses the urban scene understanding of a trained urban multimodal large language model (MLLM) to draft plausible urban scenes.

Controllable diffusion-based urban asset texture renderer: A flexible renderer that textures urban assets with 3D diffusion, following customized prompts.

MLLM-assisted urban scene refinement: A final reflection module to further improve the scene design.
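To make the 2D-to-3D idea in the first component concrete, here is a minimal sketch of extruding a 2D building footprint into a 3D prism. It is not UrbanWorld's implementation: the function name `extrude_footprint`, the vertex/face representation, and the sample footprint and height are all assumptions made for illustration, and the paper summary does not describe the actual geometry pipeline.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]
Face = Tuple[int, ...]


def extrude_footprint(
    footprint: List[Point2D], height: float
) -> Tuple[List[Point3D], List[Face]]:
    """Extrude a 2D polygon into a prism (hypothetical helper, for illustration).

    Returns (vertices, faces), where each face is a tuple of vertex indices.
    """
    if len(footprint) < 3:
        raise ValueError("a footprint needs at least three vertices")
    if height <= 0:
        raise ValueError("height must be positive")

    n = len(footprint)
    # Bottom ring at z = 0, top ring at z = height.
    bottom = [(x, y, 0.0) for x, y in footprint]
    top = [(x, y, height) for x, y in footprint]
    vertices = bottom + top

    faces: List[Face] = []
    # One quad wall per footprint edge.
    for i in range(n):
        j = (i + 1) % n
        faces.append((i, j, n + j, n + i))
    # Floor (index order reversed relative to the roof) and roof caps.
    faces.append(tuple(range(n - 1, -1, -1)))
    faces.append(tuple(range(n, 2 * n)))
    return vertices, faces


# Made-up rectangular footprint in metres, with an assumed height of 10 m.
vertices, faces = extrude_footprint([(0, 0), (4, 0), (4, 3), (0, 3)], 10.0)
```

For a four-sided footprint this yields eight vertices and six faces (four walls, a floor, and a roof). In practice the footprint coordinates and a height attribute would come from map data, and the resulting mesh would then be handed to the texturing stage.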

Results and Findings

UrbanWorld outperforms existing methods on quantitative metrics, achieving a 39.5% improvement in depth error, an 8.3% improvement in homogeneity index, and an 11.8% improvement in realistic score. The generated urban environments exhibit high-fidelity textures and diverse visual characteristics, allowing for realistic interactions between agents and the environment.
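Percentage improvements like these are usually relative changes against a baseline, with the sign convention depending on whether lower or higher is better for the metric. The sketch below assumes that reading; the summary does not state how the figures were computed, the helper `relative_improvement` is hypothetical, and the input values are made up rather than taken from the paper.

```python
def relative_improvement(baseline: float, ours: float, lower_is_better: bool) -> float:
    """Relative improvement over a baseline, in percent (assumed definition)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    # For error-style metrics a decrease is an improvement; otherwise an increase is.
    delta = (baseline - ours) if lower_is_better else (ours - baseline)
    return 100.0 * delta / abs(baseline)


# Made-up values, only to show the two sign conventions.
error_gain = relative_improvement(baseline=2.0, ours=1.0, lower_is_better=True)
score_gain = relative_improvement(baseline=4.0, ours=5.0, lower_is_better=False)
```

Under this definition, an error metric such as depth error improves when it falls, while a score-style metric improves when it rises.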

Implications and Conclusions

UrbanWorld can facilitate the construction of diverse embodied urban environments with controllable and refined visual appearance, supporting the development of AI systems and advancing research towards Artificial General Intelligence (AGI).

Open-Source Conversational AI with SpeechBrain 1.0

Authors: Mirco Ravanelli, Titouan Parcollet, Adel Moumen, Sylvain de Langen, Cem Subakan, Peter Plantinga, Yingzhi Wang, Pooneh Mousavi, Luca Della Libera, Artem Ploujnikov, Francesco Paissan, Davide Borra, Salah Zaiem, Zeyu Zhao, Shucong Zhang, Georgios Karakasidis, Sung-Lin Yeh, Aku Rouhe, Rudolf Braun, Florian Mai, Juan Zuluaga-Gomez, Seyed Mahed Mousavi, Andreas Nautsch, Xuechen Liu, Sangeet Sagar, Jarod Duret, Salima Mdhaffar, Gaelle Laperriere, Renato De Mori, Yannick Esteve

Source and references: https://arxiv.org/abs/2407.00463v3

Introduction

This paper presents SpeechBrain 1.0, a significant milestone in the evolution of an open-source Conversational AI toolkit based on PyTorch, focused on speech processing tasks.
