AI Research Roundup: High-Resolution Speech, Bioacoustics, and Model Interpretability

Breaking Down This Week's Most Impactful Papers in AI: From Earth Observation AI to Trustworthy Large Language Models

Contents

Wave-U-Mamba: An End-To-End Framework For High-Quality And Efficient Speech Super Resolution

BirdSet: A Large-Scale Dataset for Audio Classification in Avian Bioacoustics

Reinforcement Learning with Model Predictive Control for Highway Ramp Metering

Prithvi-EO-2.0: A Versatile Multi-Temporal Foundation Model for Earth Observation Applications

GIFT: A Framework for Global Interpretable Faithful Textual Explanations of Vision Classifiers

E2Former: A Linear-time Efficient and Equivariant Transformer for Scalable Molecular Modeling

Tazza: Shuffling Neural Network Parameters for Secure and Private Federated Learning

DeTrigger: A Gradient-Centric Approach to Backdoor Attack Mitigation in Federated Learning

SELMA: A Speech-Enabled Language Model for Virtual Assistant Interactions

Trust-Oriented Adaptive Guardrails for Large Language Models

The "Huh?" Button: Improving Understanding in Educational Videos with Large Language Models

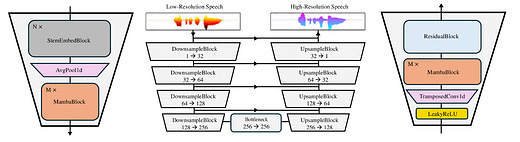

Wave-U-Mamba: An End-To-End Framework For High-Quality And Efficient Speech Super Resolution

Authors: Yongjoon Lee, Chanwoo Kim

Source and references: https://arxiv.org/abs/2409.09337v3

Introduction

This paper introduces Wave-U-Mamba, an end-to-end framework for high-quality and efficient speech super-resolution (SSR). SSR is the task of enhancing low-resolution speech signals by restoring their missing high-frequency components.

Key Points

Wave-U-Mamba performs SSR directly in the time domain, whereas conventional approaches first reconstruct log-mel features and then rely on a vocoder to generate the high-resolution speech.
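To make the SSR problem concrete, here is a minimal sketch of what "missing high-frequency components" means for a waveform. It is not the paper's method or code: the test signal, the sample rates, and the helper names `band_energy` and `fft_resample` are assumptions chosen for illustration. The sketch downsamples a synthetic 16 kHz signal to 8 kHz and then upsamples it again without any learned model, which shows that everything above the 4 kHz Nyquist limit is gone. That empty band is what a time-domain SSR model would have to regenerate.

```python
import numpy as np

def band_energy(x, sr, lo, hi):
    """Sum of squared FFT magnitudes for frequencies in [lo, hi) Hz."""
    spec = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / sr)
    mask = (freqs >= lo) & (freqs < hi)
    return float(np.sum(np.abs(spec[mask]) ** 2))

def fft_resample(x, n_out):
    """Resample x to n_out samples by truncating or zero-padding its spectrum."""
    spec = np.fft.rfft(x)
    n_bins = n_out // 2 + 1
    out = np.zeros(n_bins, dtype=complex)
    k = min(len(spec), n_bins)
    out[:k] = spec[:k]
    # Rescale so that tone amplitudes are preserved across lengths.
    return np.fft.irfft(out, n=n_out) * (n_out / len(x))

# Hypothetical setup: 16 kHz "high-resolution" audio, 8 kHz "low-resolution" audio.
sr_hi, sr_lo = 16000, 8000
t = np.arange(sr_hi) / sr_hi  # one second of samples

# Stand-in for speech: a 1 kHz tone plus a weaker 6 kHz tone.
x = np.sin(2 * np.pi * 1000 * t) + 0.5 * np.sin(2 * np.pi * 6000 * t)

# Low-resolution input: nothing above 4 kHz survives the downsampling.
low_res = fft_resample(x, sr_lo)

# Naive upsampling restores the sample rate but not the lost content.
naive_up = fft_resample(low_res, sr_hi)

print("original energy above 4 kHz:", band_energy(x, sr_hi, 4000, 8001))
print("naive upsample above 4 kHz: ", band_energy(naive_up, sr_hi, 4000, 8001))
```

The naive round trip keeps the 1 kHz tone intact but leaves the upper band essentially empty. An SSR system, whether it works on the waveform as described here or through log-mel features and a vocoder, has to fill in that band with plausible content.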
